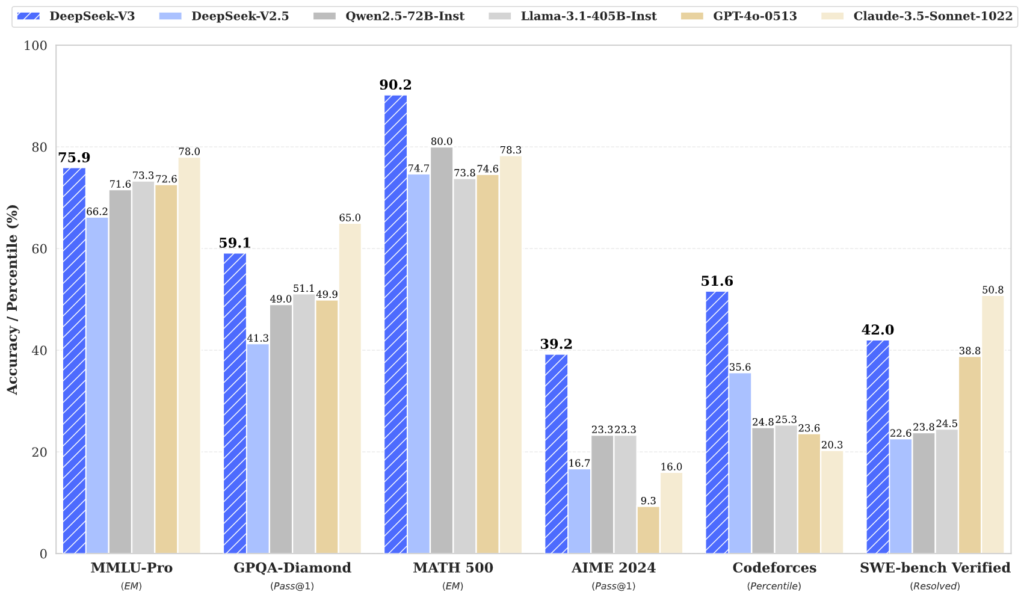

DeepSeek V3 is a large language model built on a Mixture-of-Experts (MoE) architecture. It has 671 billion parameters in total, but only 37 billion of them are activated for each token, so each token costs far less compute than it would in a dense model of the same size. The result is strong performance across a wide range of benchmarks combined with efficient inference.
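As a quick worked check of the figures above, the sketch below computes what share of the model's parameters a single token actually uses. The "2 FLOPs per active parameter" estimate is a common rule of thumb for a forward pass, not a figure published for DeepSeek V3, and it ignores the cost of attention over long contexts.

```python
# Figures from the text: 671B total parameters, 37B activated per token.
TOTAL_PARAMS = 671e9
ACTIVE_PARAMS = 37e9


def active_fraction(total: float, active: float) -> float:
    """Fraction of all parameters that participate in processing one token."""
    return active / total


def approx_forward_flops_per_token(active: float) -> float:
    """Assumption: ~2 FLOPs per active parameter per token (one multiply-add),
    ignoring attention over the context. A rough estimate only."""
    return 2 * active


if __name__ == "__main__":
    frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
    print(f"Active fraction per token: {frac:.1%}")
    print(f"Approx. forward FLOPs per token: {approx_forward_flops_per_token(ACTIVE_PARAMS):.2e}")
```

Roughly one parameter in eighteen is used per token, which is where the efficiency of the MoE design comes from.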

DeepSeek V3

Explore the capabilities of DeepSeek V3 across a range of domains, from code generation to complex queries.

Download the DeepSeek V3 models: choose either the base model or the chat-tuned version.

| Model | #Total Params | #Activated Params | Context Length | Download |
|---|---|---|---|---|
| DeepSeek-V3-Base | 671B | 37B | 128K | Hugging Face |
| DeepSeek-V3 | 671B | 37B | 128K | Hugging Face |

About DeepSeek V3

Released in December 2024, DeepSeek V3 uses a Mixture-of-Experts architecture: for each token, a router activates only a small subset of expert subnetworks, which lets the model hold 671 billion parameters in total while using just 37 billion per token. It handles a diverse range of tasks and supports a context length of 128K tokens, enough to work with long documents in a single prompt.
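To make "activated per token" concrete, here is a minimal sketch of generic top-k MoE routing in plain Python. It illustrates the idea under simplifying assumptions and is not DeepSeek V3's implementation: the linear router, the softmax gating over the selected experts, and the names `moe_forward` and `top_k_indices` are all hypothetical, and the published DeepSeek V3 design adds details, such as shared experts, that are omitted here.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k largest router scores (ties broken by lower index)."""
    return sorted(range(len(scores)), key=lambda i: (-scores[i], i))[:k]


def moe_forward(x: Vector, router_weights: List[Vector],
                experts: List[Expert], k: int) -> Vector:
    """Route one token vector x through only k of len(experts) experts.

    router_weights[e] is a hypothetical linear scoring vector for expert e.
    """
    # 1. Score every expert with a dot product (cheap compared with running them).
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # 2. Keep the k highest-scoring experts; the others are never executed.
    chosen = top_k_indices(scores, k)
    # 3. Softmax over the chosen scores gives mixing weights that sum to 1.
    m = max(scores[i] for i in chosen)
    exps = [math.exp(scores[i] - m) for i in chosen]
    total = sum(exps)
    gates = [e / total for e in exps]
    # 4. Output is the gate-weighted sum of the selected experts' outputs.
    out = [0.0] * len(x)
    for g, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + g * y_j for o, y_j in zip(out, y)]
    return out


if __name__ == "__main__":
    # Toy setup: 8 experts, expert e scales its input by (e + 1).
    calls: List[int] = []

    def make_expert(e: int) -> Expert:
        def expert(v: Vector) -> Vector:
            calls.append(e)  # record which experts actually run
            return [(e + 1) * v_j for v_j in v]
        return expert

    experts = [make_expert(e) for e in range(8)]
    router = [[float(e), 0.0] for e in range(8)]  # expert e scores e * x[0]
    y = moe_forward([1.0, 2.0], router, experts, k=2)
    print("experts run:", sorted(calls), "output:", y)
```

Only the `k` chosen experts are ever called, so compute per token scales with the activated parameters rather than the total, which is the same reason DeepSeek V3 uses 37B of its 671B parameters for each token.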